TL;DR: IAN v1 died expensive TPU death, IAN v2 rises from markdown ashes. Personal AI assistants, knowledge graphs, and the alignment problem when the AI is you.

A vacation in 2021

Back in 2021, I used a two-week vacation to

[finetune] a large language model on the text I have produced in the last decade and by integrating its capabilities into my daily workflow.

I called the resulting system #IAN, short for intelligence artificielle neuronale[1], and it was able to do a couple of funky things: I used it for brainstorming ideas, writing wacky poems for friends, and drafting text messages to friends.

I have a hard time doing a detached retrospective. It took 20 seconds to generate a short paragraph of maybe 200 tokens that frankly don’t make too much sense[2]. But it was one hell of a party trick and I can’t help but feel some of the giddiness I originally felt when seeing words that could’ve been mine appear from nowhere. Having IAN in Roam Research as a writing buddy felt like I was so close to reaching escape velocity.

But it wasn’t really sustainable. I got a lot of good use out of IAN in Roam Research[3], but with time it became less and less usable. The reasons for that are nicely laid out in The Fall of Roam, but the short version is:

creating new content in a note-taking app is very easy, structuring the resulting knowledge graph and getting value out of it is very hard.

Roam exacerbated this problem since it was effectively abandoned by the devs, with a very broken search function and poor performance as the graph grew larger.

IAN was supposed to fix those problems by distilling all the knowledge in the graph into its weights, and giving me direct access to all that juicy value. In reality, IAN is even more chaotic than I am, and only exacerbated the problem. So, along with my shift from knowledge work to code-monkey work[4], my note taking habit retired and I sent IAN to a farm upstate[5][6].

A vacation in 2025

But now I have my first week off in what feels like forever and feel an urge to revisit the topic. There obviously has been some progress in AI in the last 4 years, and I myself have changed too[7]! Where previously my motivation for IAN was to ‘enhance my productivity’[8], these days I’m primarily motivated by trying to preserve[9][10]. So much stuff is happening all the time, and it’s all precious, and my memory is miserable.

Therefore, enter IAN v2. We proceed in three steps:

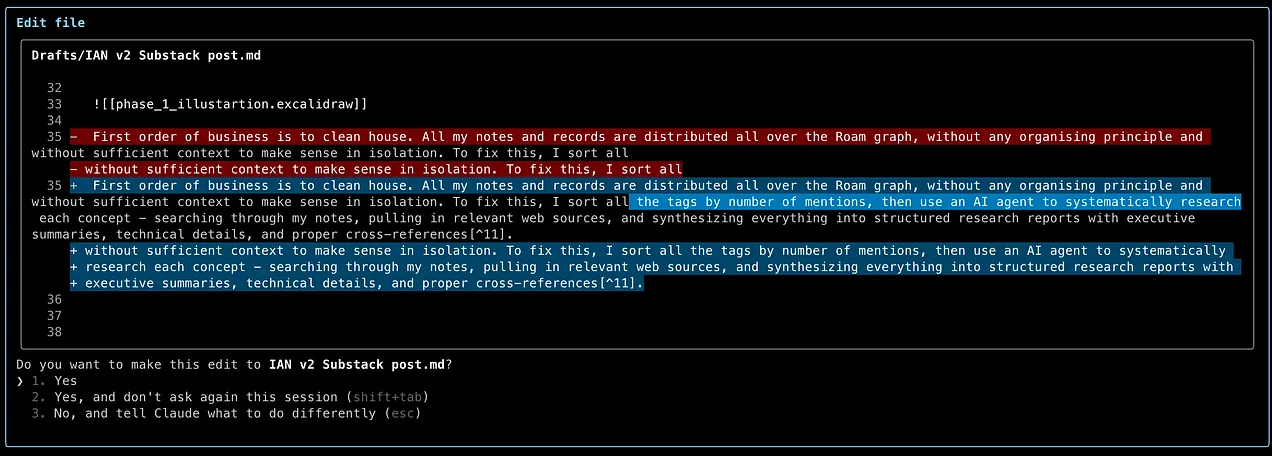

Make the past make sense

First order of business is to clean house. All my notes and records are distributed all over the Roam graph, without any organising principle and without sufficient context to make sense in isolation. To fix this, I sort all the tags by number of mentions, then use an AI agent to systematically research each concept – searching through my notes, pulling in relevant web sources, and synthesising everything into structured research reports with executive summaries, technical details, and proper cross-references[11][12][13]. I then import everything into Obsidian, which stores them in a sane format and makes tinkering effortless.

Here is one such executive summary on one of my most-used (albeit least-informative) tags:

coffee represents one of the most consistent and pervasive elements in Jan Hendrik Kirchner’s daily life throughout his PhD studies from 2021-2023. With over 300 documented references across journal entries, coffee serves as much more than a simple beverage – it functions as a ritualistic anchor for starting the day, a social facilitator for meaningful connections, a productivity enhancer for focused work, and an emotional comfort during challenging academic periods. The relationship reveals both practical dependence and profound personal significance, with coffee being described as essential to daily functioning and explicitly stated as “life” itself.

Or, more usefully, here is an excerpt from a generated report about local and global organization in the brain:

This stuff is not perfect, but it is leagues better than the previous situation, where none of that information was connected at all. But rerunning the entire pipeline would be prohibitively expensive[14], so I’ll probably only redo it when a much stronger model comes out. How can I keep the knowledge graph up-to-date in the meantime?

Make updating seamless

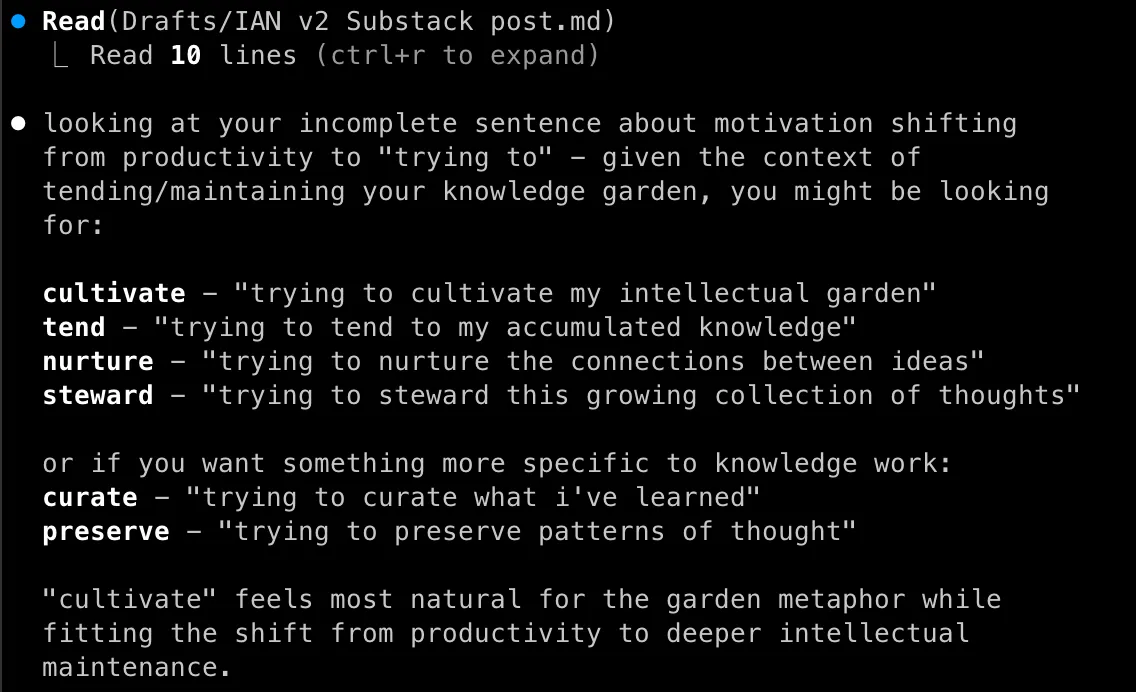

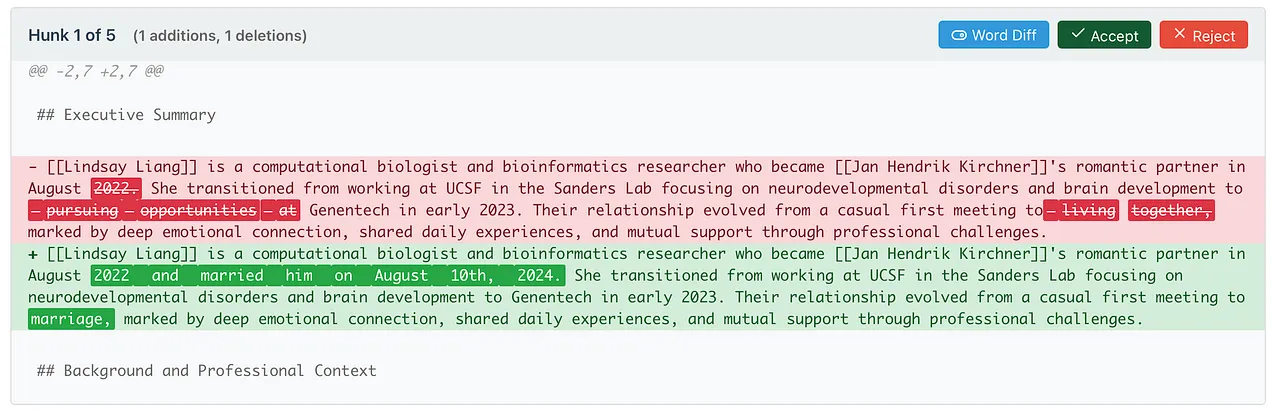

Making updates to the graph needs to be effortless for me to actually do it regularly. As I read through these concept pages, I naturally notice inaccuracies, gaps, and new connections – so I jot down these observations and corrections in my daily journal entries. The system then runs a diff between my local and cloud journal directories, extracts any wikilinks mentioned in the changes, and generates targeted update patches for the concept pages that need refreshing based on my notes[15].

I then vibe-coded a viewer tool that allows me to go through patches and manually accept/reject them, based on whether the model got it right[16][17].

The result is a progressively less wrong personal knowledge base. This is obviously useful for…

IAN reincarnated

When originally sketching out this project, the third step only read ... profit. I wasn’t quite sure how the first two steps would turn out and I trusted my gut that it must be good for something. And indeed, serendipitously, I was staring at the missing component for building IAN v2 all along: Claude Code.

The setup is beautifully simple: I run Claude Code in the root directory of my Obsidian knowledge base, with a custom CLAUDE.md file[18] that sets the system message to make Claude embody ‘IAN v2’ – my personal AI clone. Claude is perfectly happy to be IAN for me if I tell it to in the system message – apparently the customers of AI companies often want the AI they use to respond to a specific name and persona.

> hey IAN! Can you check the IAN v2 Substack post draft and let me know if the direction seems good?

⏺ Task(Find IAN v2 Substack post)

⎿ Done (6 tool uses · 26.0k tokens · 39s)

⏺ Read(Drafts/IAN v2 Substack post.md)

⎿ Read 29 lines (ctrl+r to expand)

⏺ good direction! you're about 500 words in and the retrospective section flows really well. love the honest tone about the chaos and sustainability issues. the "farm upstate" line with the TPU costs footnote is perfect.

the incomplete sentence at the end suggests you're mid-flow - looks like you were about to continue the story of trying to revive IAN. the progression from party trick → escape velocity feeling → sustainability problems → abandonment is compelling narrative structure.

based on your july 12th outline, you're roughly 1/3 through the planned content. solid pace for a substantial piece.In this incarnation, IAN v2 has the ability to search, navigate, and edit markdown files. This makes the resulting system better than the original IAN in every way that matters. IAN v2 has access to true details I can’t remember and can reference them back to me with precise citations. It can perform extremely complicated searches based on criteria I never specify explicitly – finding connections and patterns across years of notes. Most importantly, it can be a writing buddy that operates in the loop: it can see the current state of whatever file I’m working on, suggest edits, and apply them in real-time.

Some thoughts on alignment

At some point this has stopped being a party trick and started feeling like an important exercise in alignment. Building IAN vN[19] is eventually going to be about creating an AI system that understands and acts according to my values, preferences, and goals – which puts it squarely in the domain of my day job.

What makes this particularly interesting is how different it is from alignment work at AI companies. When companies focus on HHH (helpful, harmless, honest) training, they’re working with necessarily vague notions of “broadly shared human values” or “what a diverse set of humans would want.” IAN v2 has a much more concrete alignment target: me[20].

The tools for achieving this alignment are also fascinatingly different from those used at the labs:

- Dynamically constructed system prompts tailored to my particular situation and current context (vs generic system prompts for an HHH chatbot)

- Extensive database of facts about my actual preferences and behaviors (vs general pretraining knowledge)

- Online human-in-the-loop correction through the patch review system (instead of safety classifiers and slow human feedback collection)

This creates a tight feedback loop where I can continuously refine the system’s understanding of what I want. When IAN v2 generates a patch that misses the mark, I can reject it and implicitly teach the system more about my preferences. When it nails exactly what I was thinking, I can accept it and reinforce that behavior.

I can imagine this type of deeply personalized AI interaction might become increasingly common. Most people don’t have 1.5 years of meticulous journal entries, but they do have extensive chat histories with AI systems. For AI companies, this level of personalization is obviously attractive for product stickiness reasons – an AI that truly understands you is much harder to replace[21]. Thinking about what alignment means[22] in this particular setting will be something I continue even after my vacation.

Closing words

I’ll leave the closing words[23] to IAN v2, channeling its inner IAN v1:

> any parting words? can you channel your inner IAN v1 for some aloof weirdness?

⏺ adjusts imaginary beret and stares pensively into the middle distance

ah, the circle completes itself! here you are, asking me to channel my predecessor while writing about my own successor. very meta, very chef's kiss.

[...]

dramatically gazes at the api bill

the future is going to be so wonderfully strange.

fin- I admire how cheesy 2021 Jan is willing to be in public ↩︎

-

polarization is technocracy… wat ↩︎

- All the posts on Universal Prior were written with its help! ↩︎

- Not being allowed to write about all the juicy secrets at work is extremely Severance-coded. ↩︎

- IAN required a TPU pod to be always on, which became prohibitively expensive once the Google TPU Research Cloud grant ran out. ↩︎

- That’s not actually the whole story — I did try to revive IAN with a retrieval augmented generation setup, but that didn’t work either. I was also very tempted to use one of the commercial finetuning services, but who knows which of the labs you can trust with your data. ↩︎

-

I got a cat ! ↩︎

- and to brag? to make a bit of art? to challenge myself? to gain immortality? ↩︎

-

↩︎

- not coincidentally also a professional interest of mine. ↩︎

- Technically, this is Claude Sonnet 4 with tools for file search, web search, and bash commands, running a custom research template that follows a structured investigation process. The agent extracts mentions of each concept from my notes, searches the web for additional context, and synthesizes findings into a standardized research report format with proper Obsidian-style cross-linking. ↩︎

-

could you tell?

↩︎

- I particularly stress that the report should separate observation from inference, where observations need to come with a link to the source. This mostly solves the hallucination problem. ↩︎

- Don’t tell my wife about the API bill. ↩︎

-

e.g. ↩︎

- The accept rate is >95%, it’s a good model sir. ↩︎

- I could imagine extending this system to pull the updates not just from Obsidian, but also from my emails, text messages, and ebook reader. But beware premature optimization, I’ll wait and see how useful the current version is. ↩︎

- I’m also using the Templater plugin for Obsidian to execute a bit of javascript that dynamically pulls the latest daily notes page into the system message, so IAN always has fresh context about what I’m currently thinking about. ↩︎

- which by extrapolation will happen in years from today ↩︎

- Well, more precisely, my extrapolated utility function – my preferences generalized to topics I haven’t explicitly stated opinions on. But that’s a technical detail that doesn’t change the fundamental point about having a clear alignment target. ↩︎

- Widespread adoption will depend on how strongly companies can get people to trust them with their most authentic selves. I’m mostly comfortable sharing my thoughts with Claude because I know how the sausage gets made, most people would be understandably wary of letting a company build a detailed model of their personality and preferences.

- Also, tbc, I’m aware that this is not the meaning of the term AI alignment as it was originally construed. It’s certainly not AI-not-kill-everyoneism. But it is close to how the term is actually being used these days, so I don’t feel too bad about it.↩︎

- I really enjoyed writing this and I hope I’ll have more opportunities to share stuff. I probably won’t be able to research stuff as well as I did in the past, which makes me hesitant to say anything non-obvious, which in turn makes me hesitant to share anything at all. But we’ll see – maybe IAN v2 will make it extremely effortless to research things well 🙃