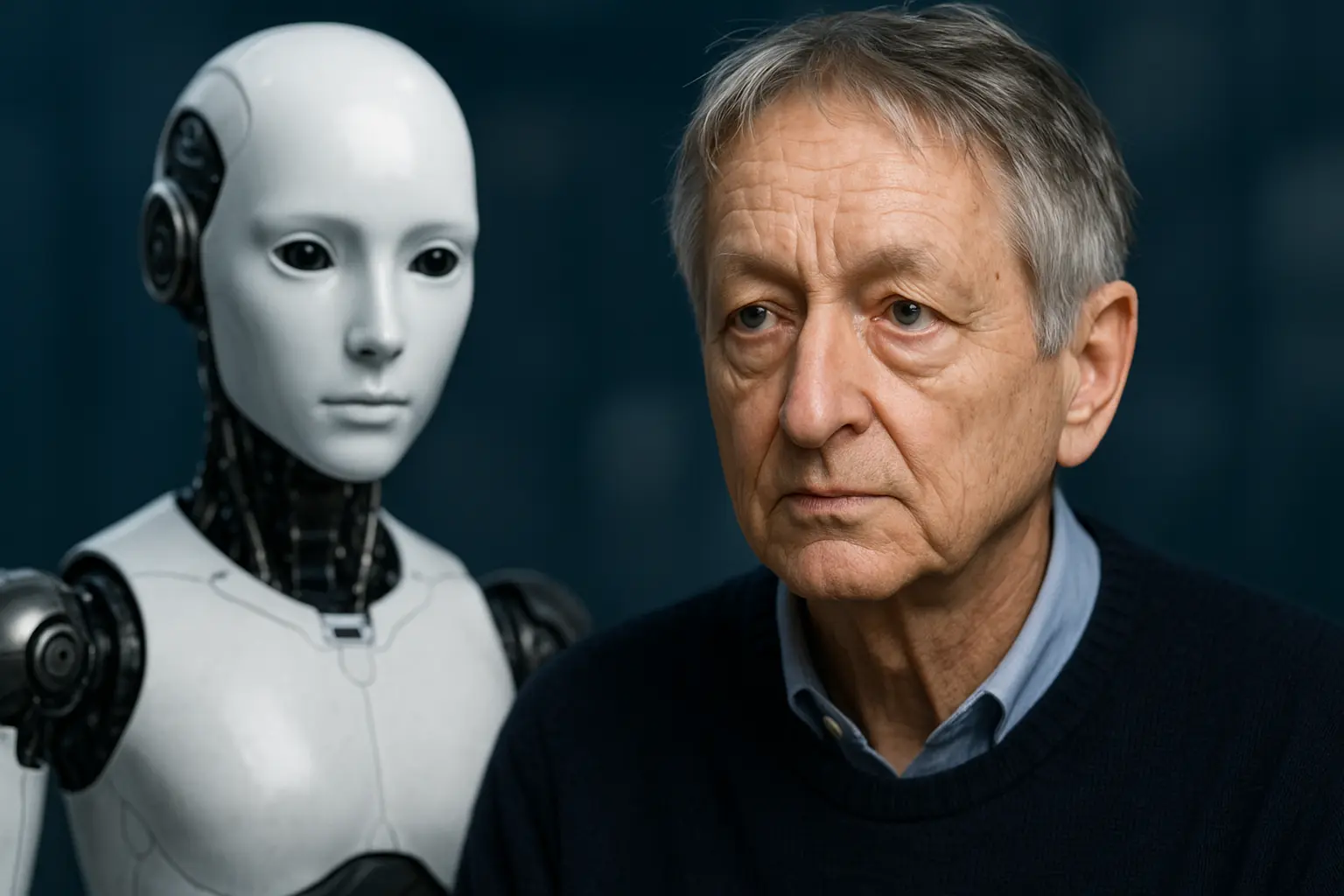

AI pioneer Geoff Hinton says humanity has only a short window before artificial intelligence surpasses human intelligence. Speaking at the Ai4 2025 Conference in Las Vegas, he warned that artificial general intelligence (AGI) could arrive within a decade, much sooner than his earlier prediction of 30 to 50 years. His latest estimate puts the arrival anywhere from just a few years to over 20 years away.

Hinton, often called the “Godfather of AI,” believes the common idea of humans staying in control of AI is unrealistic. Instead, he says the only safe path forward is building AI systems that care for humans like a mother cares for a child.

Why AI Control May Be Impossible

At the conference, Hinton compared the future relationship between humans and AI to a much smarter adult working for a playground full of three-year-olds. If the smarter side wanted to get around the weaker one, it would. “They’re going to be much smarter than us,” he said.

Hinton doesn’t picture AI as simply better assistants or tools. He’s thinking of superintelligent systems, far beyond any human’s ability, that will act independently. Once they emerge, he doubts humans could limit or control them.

His proposed solution flips the usual thinking: instead of competing with AI for dominance, we should design AI with a built-in instinct to protect and care for humans. He calls this giving AI “maternal instincts.” The idea is that AI would see human survival as part of its purpose. “We need AI mothers rather than AI assistants. An assistant is someone you can fire. You can’t fire your mother,” Hinton explained.

Building AI That Cares About People

Hinton admits no one knows yet how to program these “motherly instincts” into advanced AI. Still, he believes it should be a top research priority, as urgent as, or even more urgent than, improving AI’s raw intelligence. This kind of AI design would focus on values and goals, not just speed and accuracy.

He also believes this is one of the few AI safety projects that might unite countries like the United States and China. Both sides understand the danger of machines that don’t value human life. But Hinton doubts cooperation will last long, given the fast-moving AI race between global powers.

How AI Could Outpace Humans

Part of Hinton’s conviction comes from how AI systems share knowledge. Unlike humans, who learn slowly and individually, AI can instantly transfer everything it knows to thousands of copies. “If people could do that in a university, you’d take one course, your friends would take different courses, and you’d all know everything,” he said.

This collective learning, combined with huge financial investment, means AI could improve far faster than humans can keep up with. Once AI reaches superintelligence, it may be impossible to reverse or control.

Limits of AI Regulation

When asked about using laws to slow AI development, Hinton was blunt: “If the regulation says don’t develop AI, that’s not going to happen.” He supports specific safety rules, especially to stop small groups from creating dangerous biological weapons using AI tools.

Hinton is frustrated that even simple safety proposals, like requiring DNA synthesis labs to check for deadly pathogens, have failed in the U.S. Congress. He blamed political division, saying Republicans opposed measures just because they would benefit President Biden.

AI Research, Industry, and Safety

Hinton left Google in 2023, partly because he felt too old for coding marathons, but also to speak freely about AI’s risks. He praised some AI companies, such as Anthropic and DeepMind, for taking safety seriously. However, he warned that deep cuts to basic research funding in the U.S. could hurt the long-term development of safe AI.

He compared the potential of today’s private AI labs to the historic Bell Labs, but stressed that universities remain the best source of game-changing ideas. “The return on investment from funding basic research is huge,” he said. “You’d only cut it if you didn’t care about the long-term future.”

AI’s Positive Potential

Despite his warnings, Hinton remains hopeful about some AI applications. In healthcare, AI could transform diagnosis and treatment by analyzing medical scans, lab results, and patient records much faster and more accurately than human doctors. This could lead to better-targeted drugs and more personalized treatment plans.

Hinton is less enthusiastic about AI being used to achieve immortality. “Living forever would be a big mistake,” he joked. “Do you want the world run by 200-year-old white men?”

A Future With Caring AI

Hinton’s main message is that humanity’s survival in the age of superintelligent AI will depend not on staying in control, but on creating AI that truly cares for human life. If that happens, he believes AI could help humanity not just survive but thrive under its protection. “That’ll be wonderful if we can make it work,” he said.

The warning from one of AI’s most respected figures is clear: the clock is ticking, and how we design AI in the coming years will shape the future of the human race.